Build Pipeline

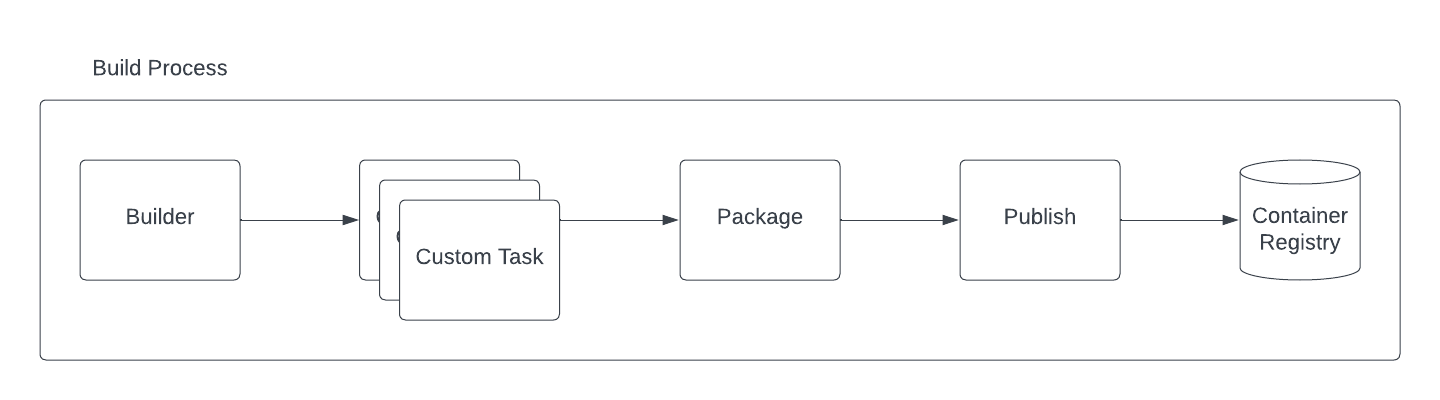

Since version 2.0, we’ve introduced the concept of a Pipeline to give flexibility to users who want to customize the entire build process. We can think of a pipeline as a series of tasks that generate a Camel application project, build it, and publish it to a container registry.

The Camel K operator creates a default pipeline containing opinionated build, package and publish operations. Besides these, you can include any additional task to enrich the build process.

The 3 main tasks are required by Camel K to perform a build and provide a container image, which will be used to start a Camel application. The build is a Maven process in charge of creating a Maven project based on the Integration sources provided.

If you’re building a Quarkus Native image, the quarkus trait instructs the builder to include a custom task (named quarkus-native) which takes care of executing the native image compilation. This is completely hidden from the final user.

Once the build is over, the package task prepares the context later required by the publishing operations. This task copies all artifacts and prepares a Dockerfile which may be used by the rest of the process. Finally, the publish task generates the container image and pushes it to the registry, using the artifacts produced by the package task.

Custom tasks can be provided at any point of the pipeline (see the Tasks filtering and sorting section). They provide the flexibility required to accommodate any company build process. This part is optional: by default, Camel K does not need any custom task.

Add custom user tasks

The final user can include any optional task, which by default runs after the build operation. We think this is the best moment for a custom operation, as it is the step immediately after the Maven project is generated and, ideally, the context of any custom operation is the project.

Custom tasks are only available when using the builder pod strategy, so that the user can provide each task with the tools required to run the specific customization.

Let’s see an example:

spec:
  tasks:
  - builder:
      ...
  - custom:
      command: tree
      image: alpine
      name: custom1
      userId: 0
  - custom:
      command: cat maven/pom.xml
      image: alpine
      name: custom2
  - jib:
      ...

The custom tasks will be executed in the directory where the Camel K runtime Maven project was generated. In this example we’re creating 2 tasks that retrieve certain values from the project, purely to illustrate the feature. For each task you need to specify a name, the container image used to run it, the command to execute and (optionally) the user ID that runs the command in the container.

The goal is to let the user perform custom tasks which may result in a success or a failure. If a task fails, the entire build is stopped and fails accordingly.
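As a rough illustration of the success/failure contract, a custom task command could wrap a check like the one sketched below. The function name check_project and the specific check are hypothetical; the only thing taken from this page is the maven/pom.xml path used in the example above and the fact that a failing task fails the build.

```shell
# Hypothetical check for a custom task: succeed only if the generated
# project contains maven/pom.xml (the path used in the example above).
check_project() {
  project_dir=${1:-.}
  if [ ! -f "$project_dir/maven/pom.xml" ]; then
    echo "maven/pom.xml not found in $project_dir" >&2
    # A non-zero status marks the task as failed, which stops the build.
    return 2
  fi
  echo "project looks valid"
}
```

A task image that ships this function could call check_project as its command and let the exit status decide the outcome of the build.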

Configuring via builder trait

We are aware that configuring the Build type directly is something that the majority of users won’t do. For this reason we’re enabling the same feature in the Builder trait.

Maintaining the example above as a reference, configuring a custom task will be as easy as adding a trait property when running your Integration, for instance, via CLI:

kamel run Test.java -t builder.tasks="custom1;alpine;tree;0" -t builder.tasks="custom2;alpine;cat maven/pom.xml"

Another interesting configuration you can provide via the Builder trait is the Kubernetes requests and limits. Each of the tasks in the pipeline can be configured with the proper resource settings. You can use, for instance, -t builder.request-cpu <task-name>:1000m to configure the container executed by task-name. This configuration works for all tasks, including the builder, package and publishing ones.
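The builder.tasks values above follow a name;image;command;userId pattern, with the user ID being optional. A minimal sketch of a helper that assembles such a value is shown here; task_spec is a hypothetical name, not part of the kamel CLI. Because the value contains semicolons, it should always be quoted when passed on a shell command line.

```shell
# Hypothetical helper: print a builder.tasks value in the
# name;image;command[;userId] form used in the commands above.
task_spec() {
  name=$1
  image=$2
  cmd=$3
  user_id=${4:-}
  spec="${name};${image};${cmd}"
  # Append the optional user ID only when one was given.
  if [ -n "$user_id" ]; then
    spec="${spec};${user_id}"
  fi
  printf '%s\n' "$spec"
}

# Possible usage (quotes keep the shell from splitting on ';'):
#   kamel run Test.java -t builder.tasks="$(task_spec custom1 alpine tree 0)"
```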

Getting task execution status

Although the main goal of a custom task execution is to produce a success/failure result, we thought it could be useful to let the user consult the log of each task. For this reason, you can read it directly in the Build type. See the following example:

conditions:
- lastTransitionTime: "2023-05-19T09:56:02Z"
  lastUpdateTime: "2023-05-19T09:56:02Z"
  message: |
    ...
    {"level":"info","ts":1684490148.080175,"logger":"camel-k.builder","msg":"base image: eclipse-temurin:11"}
    {"level":"info","ts":1684490148.0801787,"logger":"camel-k.builder","msg":"resolved base image: eclipse-temurin:11"}
  reason: Completed (0)
  status: "True"
  type: Container builder succeeded
- lastTransitionTime: "2023-05-19T09:56:02Z"
  lastUpdateTime: "2023-05-19T09:56:02Z"
  message: |2
    │   │   ├── org.slf4j.slf4j-api-1.7.36.jar
    │   │   └── org.yaml.snakeyaml-1.33.jar
    │   ├── quarkus
    │   │   ├── generated-bytecode.jar
    │   │   └── quarkus-application.dat
    │   ├── quarkus-app-dependencies.txt
    │   └── quarkus-run.jar
    └── quarkus-artifact.properties

    21 directories, 294 files
  reason: Completed (0)
  status: "True"
  type: Container custom1 succeeded
- lastTransitionTime: "2023-05-19T09:56:02Z"
  lastUpdateTime: "2023-05-19T09:56:02Z"
  message: |2-
                  </properties>
                </configuration>
              </execution>
            </executions>
            <dependencies></dependencies>
          </plugin>
        </plugins>
        <extensions></extensions>
      </build>
    </project>
  reason: Completed (0)
  status: "True"
  type: Container custom2 succeeded
- lastTransitionTime: "2023-05-19T09:56:02Z"
  lastUpdateTime: "2023-05-19T09:56:02Z"
  message: |
    ...test-29ce59bf-178f-4c4f-9d12-407461533e2a/camel-k-kit-chjkf0vkglls73fhp9lg:339751: digest: sha256:62d184a112327221e5cac6bea862fc71341f3fc684f5060d1e137b4b7635db06 size: 1085"}
  reason: Completed (0)
  status: "True"
  type: Container spectrum succeeded

Given the limited space we can use in a Kubernetes custom resource, we truncate the log to the last lines of execution. One good strategy is to leverage reason, where we provide the execution status code (0 on success), and to use a distinct error code for each exceptional situation you want to handle.

If for any reason you still need to access the entire log of the execution, you can always access the log of the builder Pod and the specific container that was executed, for example: kubectl logs camel-k-kit-chj2gpi9rcoc73cjfv2g-builder -c task1 -p
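If you rely on the status code carried by reason, a small parser can pull it out of the condition text. The sketch below assumes the code always appears as a parenthesised number at the end of the string, as in the Completed (0) values shown above; the format of failure reasons is not shown on this page, so treat that part as an assumption. The name reason_exit_code is hypothetical.

```shell
# Hypothetical helper: extract the numeric status code from a condition
# reason such as "Completed (0)". Prints nothing when no code is found.
reason_exit_code() {
  printf '%s\n' "$1" | sed -n 's/.*(\([0-9][0-9]*\))$/\1/p'
}
```

The result could then drive a case statement that maps each error code of your custom task to a specific follow-up action.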

Tasks filtering and sorting

The default execution of tasks can be changed. You can include or remove tasks and provide your own order of execution. When using the builder trait, this is done via the builder.tasks-filter property. This parameter accepts a comma-separated list of tasks to execute. It must specify both operator tasks (for example, builder) and custom tasks.

Warning: altering the order of tasks may result in disruptive build behavior.
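Since builder.tasks-filter takes a comma-separated list in execution order, the value can be assembled from an ordered list of task names. This is only a sketch; tasks_filter is a hypothetical helper, and the task names in the usage comment are the ones used elsewhere on this page.

```shell
# Hypothetical helper: join task names, in the given order, into the
# comma-separated value expected by builder.tasks-filter.
tasks_filter() {
  filter=""
  for task in "$@"; do
    # Prefix a comma only when the list already has an entry.
    filter="${filter:+$filter,}$task"
  done
  printf '%s\n' "$filter"
}

# Possible usage:
#   kamel run test.yaml -t builder.tasks-filter="$(tasks_filter builder package my-buildah)"
```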

With this approach you can also provide your own publishing strategy. The pipeline runs as a Kubernetes Pod. Each task is an initContainer, except the last one, which is the final container and has to write the published image digest to /dev/termination-log to signal that the publish was completed. This is done out of the box when a supported publishing strategy is executed. If you want to provide your own publishing strategy, you need to follow this rule for the entire process to work (see the examples later).

We inject certain environment variables into each custom container so that it can get the dynamic values required to perform certain operations (for example, publishing a container image):

- INTEGRATION_KIT_IMAGE contains the name of the image expected to be used for the IntegrationKit generated during the pipeline execution

We may add more if they are required and useful for a general case.
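A custom task script that depends on INTEGRATION_KIT_IMAGE may want to fail early when the variable is missing, for instance when the script is tried outside the builder Pod. A minimal sketch, with the hypothetical function name require_kit_image:

```shell
# Hypothetical guard: print the injected image name, or fail with a
# clear message when INTEGRATION_KIT_IMAGE is empty or unset.
require_kit_image() {
  if [ -z "${INTEGRATION_KIT_IMAGE:-}" ]; then
    echo "INTEGRATION_KIT_IMAGE is not set" >&2
    return 1
  fi
  printf '%s\n' "$INTEGRATION_KIT_IMAGE"
}
```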

Execution privileges

The builder Pod sets a PodSecurityContext with a specific user ID. This is a convention to maintain the same default user ID for the container images we use by default. Each container inherits this value unless it is overridden with the user ID parameter. The value is 1001 by default unless you’re using the S2I publishing strategy (the default strategy for OpenShift), where the value is defined by the platform.

Warning: you may run your task with root privileges using user ID 0. However, this is highly discouraged. You should instead make your container image able to run with the default user ID privileges.

Custom tasks examples

As we are using container images for execution, you will be able to execute virtually any kind of task. You can provide your own container image with the tools required by your company or use any image available in the open source ecosystem.

As the target of the execution is the project, before the artifact is published to the registry, you can execute any task to validate the project. Think of a vulnerability scanner, a code quality tool or any other action you typically perform in your build pipeline.

Use your own publishing strategy

We suggest using the supported publishing strategies, as they work out of the box and are optimized for the operator. However, you may choose to adopt your own publishing strategy. Here is an example that runs an Integration using a Buildah publishing strategy:

$ kamel run test.yaml -t builder.strategy=pod -t builder.base-image=docker.io/library/eclipse-temurin:17 -t builder.tasks="my-buildah;quay.io/buildah/stable;/bin/bash -c \"cd context && buildah bud --storage-driver=vfs --tls-verify=false -t \$(INTEGRATION_KIT_IMAGE) . && buildah push --storage-driver=vfs --digestfile=/dev/termination-log --tls-verify=false \$(INTEGRATION_KIT_IMAGE) docker://\$(INTEGRATION_KIT_IMAGE)\";0" -t builder.tasks-filter=builder,package,my-buildah

In this case we have created a custom task named my-buildah, which is expected to run both the build and the publish operations. We must change to the context directory, where the package task has written a Dockerfile. We use the INTEGRATION_KIT_IMAGE environment variable and write the pushed image digest to /dev/termination-log, as expected by the procedure.

Note: depending on the strategy used, you may not have a built-in feature for this. It is enough to add a command that writes the digest of the generated image, for example echo $my-image-digest > /dev/termination-log.

Finally, we filter the tasks we want to execute, making sure to include the builder and package tasks, which are provided by the operator and are in charge of the building and the packaging.

Note: we had to run the my-buildah task with root privileges, as this is required by the default container image. We also had to override builder.base-image, as it requires special treatment for Buildah to work.
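For publishing tools that cannot write the digest file themselves, the hand-off to /dev/termination-log can be scripted. The sketch below is an assumption-laden illustration: write_digest is a hypothetical name, the sha256 format check is our own sanity check rather than an operator requirement, and the optional second argument exists only so the function can be tried outside a Pod.

```shell
# Hypothetical helper: write an image digest to the file the operator
# reads once the final container terminates.
write_digest() {
  digest=$1
  target=${2:-/dev/termination-log}
  # Sanity check (our assumption): the digest looks like sha256:<hex>.
  if ! printf '%s' "$digest" | grep -Eq '^sha256:[0-9a-f]+$'; then
    echo "unexpected digest format: $digest" >&2
    return 1
  fi
  printf '%s' "$digest" > "$target"
}
```

Rejecting a malformed value before writing it makes a broken publish step fail inside the task rather than later in the pipeline.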